Softmax: O(e^d)

How much information can attention mechanisms store? Using relative error analysis, we show that linear attention scales linearly with head dimension while softmax attention scales exponentially with head dimension.

I am a Member of Technical Staff at Thinking Machines Lab, where I work on pre-training science.

I received my Ph.D. from MIT EECS, advised by Prof. Song Han. My research focuses on efficient algorithms and systems for large foundation models.

Previously, I graduated from Tsinghua University with a B.Eng. in Computer Science and a B.Econ. in Finance (with honors), and spent 2020–2021 as a visiting researcher at Stanford University.

A mathematical explanation of why the effective receptive field of sliding window attention is O(W) rather than the theoretical O(LW), where W is the window size and L is the number of layers. The bound holds regardless of depth because of information dilution and exponential decay from residual connections.
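To make the theoretical O(LW) figure concrete, here is a minimal sketch that counts which input positions can reach the last token after stacking several sliding window layers. It assumes a causal window in which each token attends to itself and the previous W - 1 tokens. The function name `theoretical_receptive_field` is hypothetical and is not taken from the post. The sketch covers only the reachability bound, not the dilution or residual decay that shrink the effective field to O(W).

```python
import numpy as np


def theoretical_receptive_field(num_layers: int, window: int, seq_len: int) -> int:
    """Count input positions that can reach the last token after
    `num_layers` causal sliding-window attention layers."""
    idx = np.arange(seq_len)
    # offset[i, j] = i - j: how far key j lies behind query i.
    offset = idx[:, None] - idx[None, :]
    # Assumed mask: query i sees key j if j is one of the last `window` tokens up to i.
    mask = ((offset >= 0) & (offset < window)).astype(np.int64)

    # reach[i, k] is True when information from input k can arrive at position i.
    reach = np.eye(seq_len, dtype=bool)
    for _ in range(num_layers):
        # One more layer: i reaches k if i attends to some j that already reaches k.
        reach = (mask @ reach.astype(np.int64)) > 0

    return int(reach[-1].sum())


if __name__ == "__main__":
    for layers in (1, 2, 4, 8):
        print(layers, theoretical_receptive_field(layers, window=4, seq_len=64))
```

Under these assumptions the count grows as L(W - 1) + 1 until it hits the sequence length, which is the linear-in-depth growth that the O(LW) bound describes.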

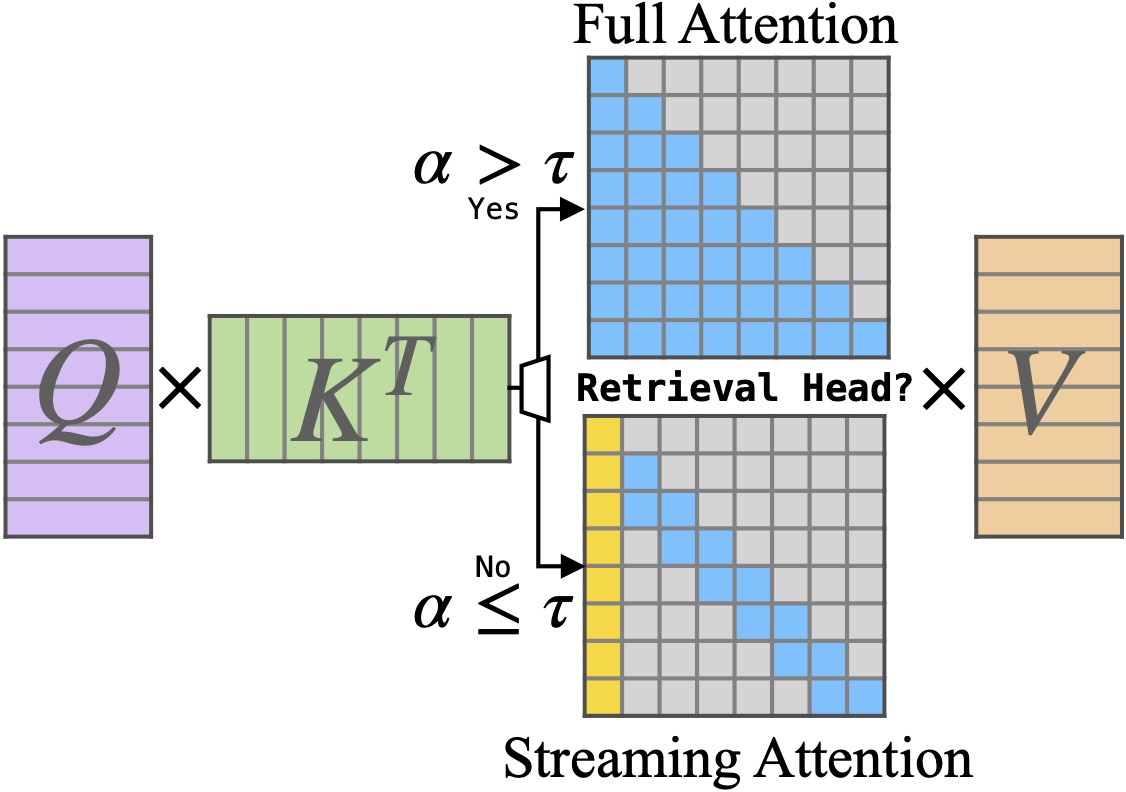

A statistical model revealing how block sparse attention achieves efficiency and accuracy through learned similarity gaps.

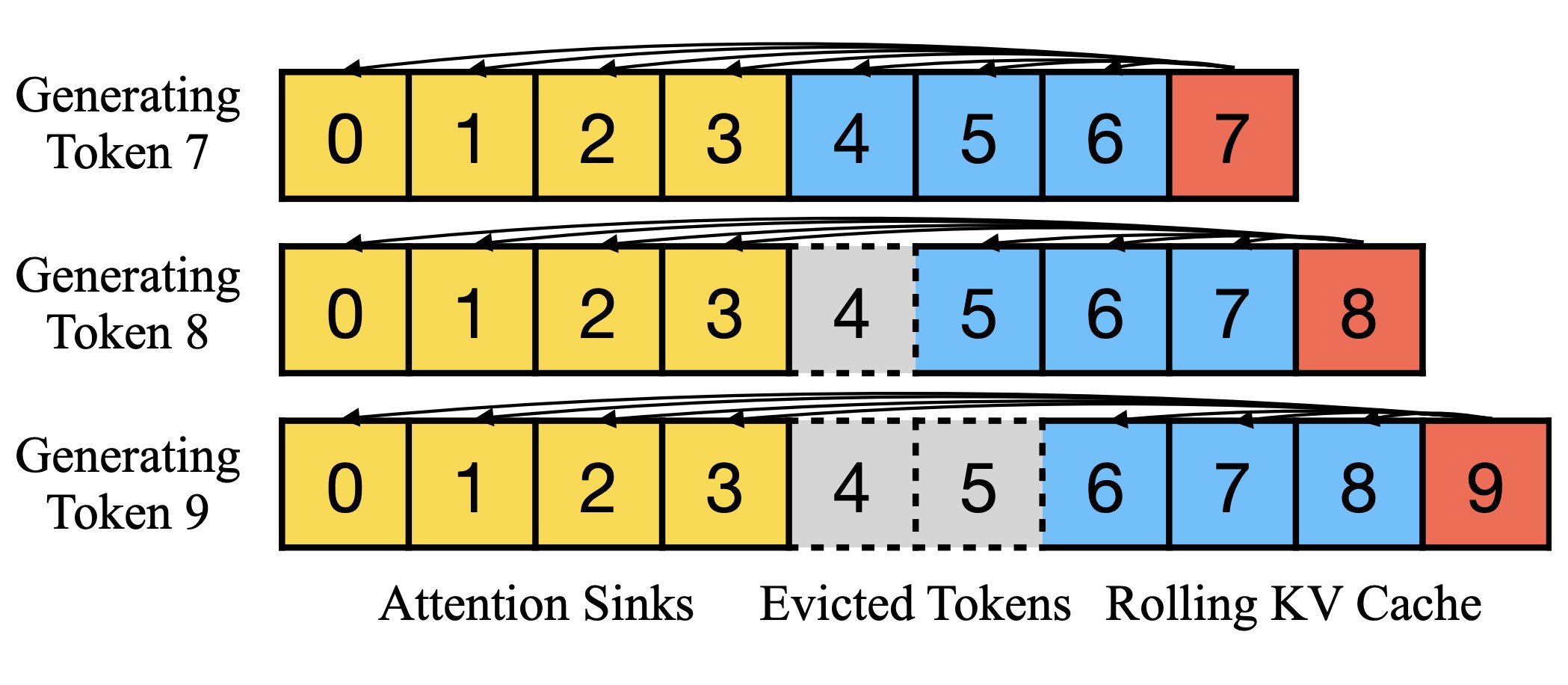

We discovered that attention sinks, where models park unused attention on initial tokens, are crucial for language model stability. Without them, models fail catastrophically when processing long conversations; with them, they maintain stable performance across millions of tokens.